Weight-parameterized Residual Neural Networks

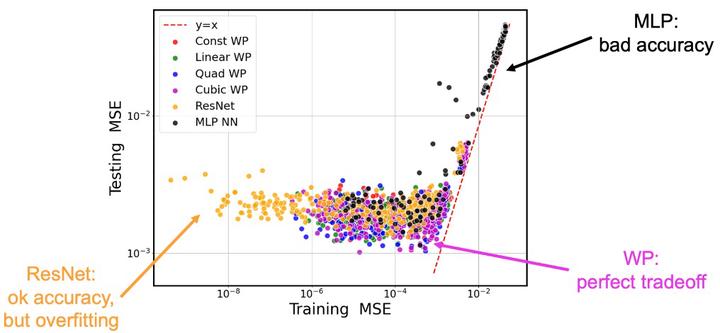

We focus on residual neural networks (ResNets), a neural network (NN) architecture with shortcut connections, in the context of NN-based regression for scientific machine learning (ML). Inspired by the neural ODE analogy, in which a ResNet is viewed as a discretization of a continuous-depth ordinary differential equation, we develop an approach that parameterizes the ResNet weight matrices as functions of depth. The choice of parameterization controls the capacity of the network, which regularizes it and improves generalization compared to standard multilayer perceptron (MLP) NNs [1].
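As a rough illustration of the depth parameterization, a residual layer can be read as a forward-Euler step $x_{l+1} = x_l + h\,\sigma\big(W(t_l)\,x_l + b(t_l)\big)$ with $t_l = l h$ and $h = 1/L$, where the weights are expanded in a basis in depth, e.g. $W(t) = \sum_{k=0}^{K-1} A_k t^k$ and $b(t) = \sum_{k=0}^{K-1} c_k t^k$. As $L \to \infty$ this approaches the neural ODE $\dot{x}(t) = \sigma\big(W(t)\,x(t) + b(t)\big)$ on $t \in [0, 1]$. The NumPy sketch below assumes a tanh activation, constant width, and a monomial basis; these are illustrative choices and not necessarily the parameterizations used in [1] or in QUiNN. The function names are hypothetical.

```python
import numpy as np


def wp_weights(coeffs, t):
    """Evaluate sum_k coeffs[k] * t**k (hypothetical monomial basis in depth t)."""
    return sum(c * t**k for k, c in enumerate(coeffs))


def wp_resnet_forward(x, A, c, num_layers):
    """Forward pass of a weight-parameterized ResNet (illustrative sketch).

    x: (d,) input; A: (K, d, d) weight coefficients; c: (K, d) bias coefficients.
    The trainable parameters are A and c, whose size depends on K, not on num_layers.
    """
    h = 1.0 / num_layers  # depth step, assuming depth t runs over [0, 1]
    for l in range(num_layers):
        t = l * h
        W = wp_weights(A, t)  # (d, d) weight matrix evaluated at depth t
        b = wp_weights(c, t)  # (d,) bias evaluated at depth t
        x = x + h * np.tanh(W @ x + b)  # shortcut connection plus residual update
    return x


# Usage: width 4, three basis terms, 50 residual layers
rng = np.random.default_rng(0)
d, K = 4, 3
A = rng.normal(size=(K, d, d))
c = rng.normal(size=(K, d))
x0 = rng.normal(size=d)
y = wp_resnet_forward(x0, A, c, num_layers=50)
print(y.shape)  # (4,)
```

Because the layer weights are tied through the basis coefficients, increasing the number of layers refines the depth discretization without adding trainable parameters.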

In addition, weight-parameterized (WP) ResNets are more amenable to Bayesian treatment because they have fewer parameters and an overall more regularized loss, or log-posterior, surface. WP ResNets are implemented in Quantification of Uncertainties in NNs (QUiNN), a software library we have developed that consists of probabilistic wrappers around PyTorch NN modules.
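To make the parameter reduction concrete, the sketch below counts hidden-layer parameters under simplifying assumptions: constant width, one weight matrix and bias per residual layer in the standard ResNet, and K basis coefficients per weight and bias in the WP ResNet. Input and output layers are ignored, and the actual counts in [1] or QUiNN may differ; the function names are hypothetical.

```python
def count_resnet_params(width, num_layers):
    """Standard ResNet: every layer has its own (width x width) weight and bias."""
    return num_layers * (width * width + width)


def count_wp_resnet_params(width, num_basis):
    """WP ResNet (assumed basis expansion): one weight/bias coefficient set per basis term.

    Note that the count does not depend on the number of layers.
    """
    return num_basis * (width * width + width)


# Example: width 10, 20 residual layers, 3 basis terms
print(count_resnet_params(10, 20))    # 2200
print(count_wp_resnet_params(10, 3))  # 330
```

A Bayesian posterior over 330 rather than 2200 parameters is a much smaller sampling or variational problem, which is one reason the reduced parameterization helps.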

Figure: Demonstration of improved generalization with WP ResNets.

Acknowledgements

This work is supported by the SNL LDRD program.

References

[1] O.H. Diaz-Ibarra, K. Sargsyan, H.N. Najm, “Surrogate Construction via Weight Parameterization of Residual Neural Networks”, Computer Methods in Applied Mechanics and Engineering, 433(A), January 2025.